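A recent project of mine required playing a video while it is still being cached, with support for seeking inside the video. In practice this is video on demand, only delivered over HTTP: the user drags the seekbar to jump to a given point in time. To support that, the key information in the video has to be parsed in advance, so that each time point maps to a byte offset inside the video stream. When the user actually seeks, the target time is converted to an offset inside the file, and the stream content is then downloaded and cached from that offset.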

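For how to play a video while caching it, see my posts 《Android视频播放之边缓存边播放》 (Android video playback: play while caching) and 《Android 视频播放之流媒体格式处理》 (Android video playback: handling streaming media formats). The purpose of this post is to show how to parse the video data stream.

Preparation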

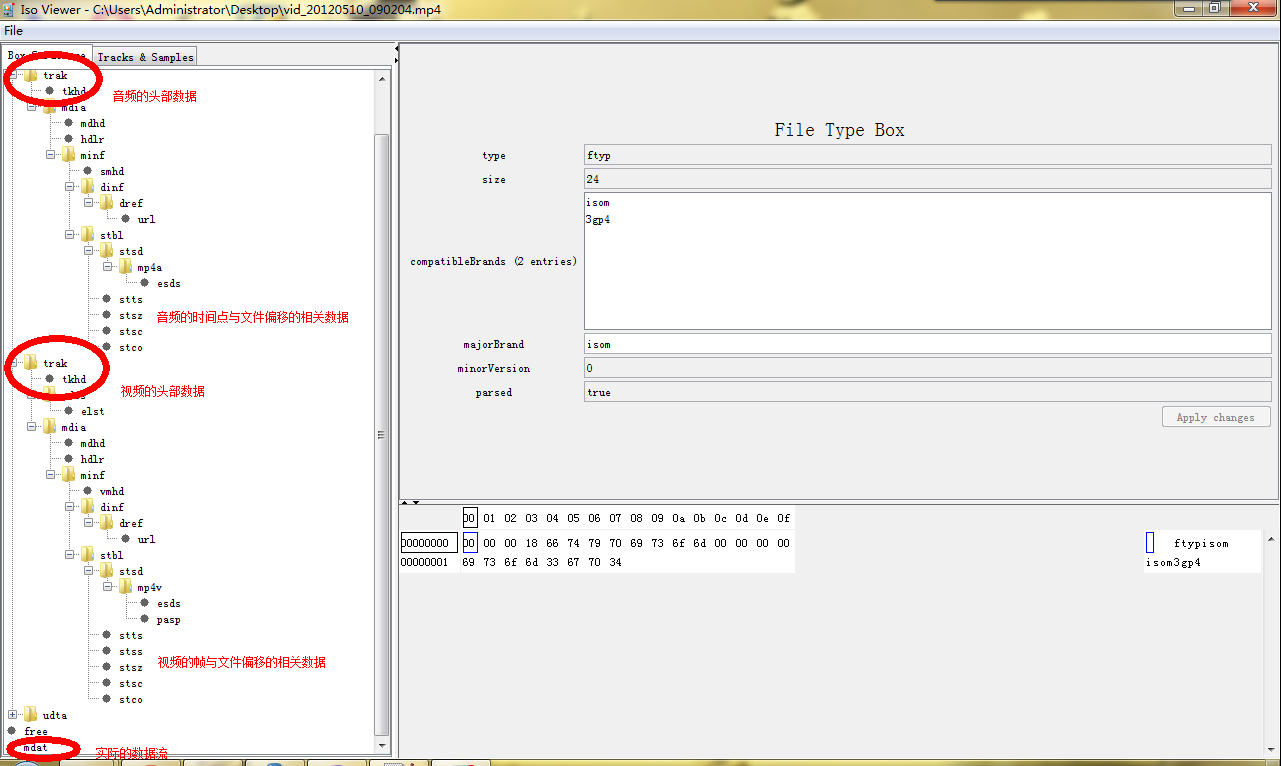

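The third-party parsing library used here is mp4parser; its Google Code project page is http://code.google.com/p/mp4parser/. It parses MP4 and other media formats, and the parsing code in this post is built on its low-level data parsing. The project also ships a graphical viewer, downloadable from http://mp4parser.googlecode.com/files/isoviewer-2.0-RC-7.jar, which can be used to inspect the overall structure of a video file.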

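Run the following on the command line to start the viewer:

F:\workspace\mp4parser-read-only>java -jar isoviewer-2.0-RC-7.jar

Then import the video file you want to inspect through the File menu.

The screenshot looks like this:

The code in this post depends on the following jars: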

- http://carey-blog-image.googlecode.com/files/aspectjrt.jar

- http://carey-blog-image.googlecode.com/files/isoparser-1.0-RC-6-20120510.091755-1.jar

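Key code walkthrough

1. Compute the total duration of the video

Divide the video's total Duration (expressed in time units) by its Timescale (time units per second); the result is the total length of the video in seconds.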


lengthInSeconds = (double) isoFile.getMovieBox().getMovieHeaderBox().getDuration() / isoFile.getMovieBox().getMovieHeaderBox().getTimescale();
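As a quick check of the arithmetic, here is a minimal, library-free sketch. The class name `DurationSketch`, the method `toSeconds`, and the two input values are hypothetical (they are not read from a real file); the values were only chosen to be consistent with the 123.636 s reported later in this post.

```java
// Sketch only: the inputs below are hypothetical, not read from a real file.
final class DurationSketch {

    /** Converts a duration given in timescale units to seconds. */
    static double toSeconds(long duration, long timescale) {
        if (timescale <= 0) {
            throw new IllegalArgumentException("timescale must be positive");
        }
        // Cast before dividing: long / long would drop the fractional part.
        return (double) duration / timescale;
    }

    public static void main(String[] args) {
        long duration = 123636; // hypothetical Duration from the movie header
        long timescale = 1000;  // hypothetical Timescale (units per second)

        System.out.println(toSeconds(duration, timescale)); // prints 123.636
        System.out.println(duration / timescale);           // prints 123 (integer division)
    }
}
```

The cast to `double` in the original one-liner matters for the same reason: without it the fraction of a second would be lost.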

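2. Get the audio and video box data

A typical streaming media file has two TrackBoxes. In the files handled here the first one is audio and the second one is video, although the format itself does not guarantee that order.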


List<TrackBox> trackBoxes = isoFile.getMovieBox().getBoxes(TrackBox.class);

3. Parse the video box data

Get the keyframe information. A seek can only land on a keyframe, so what matters most is the time point of each keyframe.


    Iterator<TrackBox> iterator = trackBoxes.iterator();
    SampleTableBox stbl = null;
    TrackBox trackBox = null;
    while (iterator.hasNext()) {
        trackBox = iterator.next();
        stbl = trackBox.getMediaBox().getMediaInformationBox().getSampleTableBox();
        // Only the video keyframes are processed; audio data is skipped.
        // A track with a SyncSampleBox is treated as the video track.
        if (stbl.getSyncSampleBox() != null) {
            syncSamples = stbl.getSyncSampleBox().getSampleNumber();
            syncSamplesSize = new long[syncSamples.length];
            syncSamplesOffset = new long[syncSamples.length];
            timeOfSyncSamples = new double[syncSamples.length];
            break;
        }
    }

4. Get the detailed parameters inside the video box

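Three mappings are needed. The first is frame to time, i.e. the second at which each frame is shown. The second is frame to chunk: MP4 stores runs of consecutive frames together in a chunk, and the number of frames per chunk varies. The third is chunk to file offset, i.e. where in the file the stream data of each chunk begins.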


    // First we get all samples from the 'normal' MP4 part. If there are none, no problem.
    SampleSizeBox sampleSizeBox = trackBox.getSampleTableBox().getSampleSizeBox();
    ChunkOffsetBox chunkOffsetBox = trackBox.getSampleTableBox().getChunkOffsetBox();
    SampleToChunkBox sampleToChunkBox = trackBox.getSampleTableBox().getSampleToChunkBox();
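To make the frame-to-chunk mapping more concrete: the sample-to-chunk table is stored in a compact, run-length style, where each entry says "starting at chunk N, every chunk holds K samples". The sketch below expands such a table into one sample count per chunk, which is my understanding of what `SampleToChunkBox.blowup(...)` returns in the code of the next section. It does not use mp4parser; the class `SampleToChunkSketch`, its nested `Entry` type, the method `samplesPerChunk`, and the sample data are all hypothetical.

```java
import java.util.Arrays;

// Sketch only: a library-free illustration, not mp4parser's implementation.
final class SampleToChunkSketch {

    /** One table entry: from chunk firstChunk (1-based) on, each chunk holds samplesPerChunk samples. */
    static final class Entry {
        final long firstChunk;
        final long samplesPerChunk;

        Entry(long firstChunk, long samplesPerChunk) {
            this.firstChunk = firstChunk;
            this.samplesPerChunk = samplesPerChunk;
        }
    }

    /** Expands the compact table into one sample count per chunk. */
    static long[] samplesPerChunk(Entry[] entries, int chunkCount) {
        long[] result = new long[chunkCount];
        for (int e = 0; e < entries.length; e++) {
            // An entry applies until the next entry starts, or until the last chunk.
            long from = entries[e].firstChunk;
            long to = (e + 1 < entries.length) ? entries[e + 1].firstChunk - 1 : chunkCount;
            for (long chunk = from; chunk <= to && chunk <= chunkCount; chunk++) {
                result[(int) (chunk - 1)] = entries[e].samplesPerChunk;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical table: chunks 1-3 hold 3 samples, chunk 4 holds 2, chunks 5-6 hold 1.
        Entry[] entries = { new Entry(1, 3), new Entry(4, 2), new Entry(5, 1) };
        System.out.println(Arrays.toString(samplesPerChunk(entries, 6)));
        // prints [3, 3, 3, 2, 1, 1]
    }
}
```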

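5. Compute the offsets

Using the information above, walk through every frame and record, for each keyframe, its time point and the offset of its data inside the file.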
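The core of this calculation can be sketched without mp4parser. A frame's offset is the start of its chunk plus the sizes of the frames that precede it in that chunk, and a frame's time is the sum of the durations of all earlier frames divided by the timescale. Everything in the sketch is hypothetical: the class `SyncSampleIndexSketch`, its method names, and the tiny input tables were made up for illustration. Unlike the listing in this post, it accumulates integer ticks and divides once, which avoids the small rounding drift that comes from repeatedly adding doubles.

```java
import java.util.Arrays;

// Sketch only: hypothetical names and data, independent of mp4parser.
final class SyncSampleIndexSketch {

    /** Offset of every sample: chunk start plus the sizes of the samples before it in the chunk. */
    static long[] sampleOffsets(long[] chunkOffsets, long[] samplesPerChunk, long[] sampleSizes) {
        long[] offsets = new long[sampleSizes.length];
        int sample = 0;
        for (int chunk = 0; chunk < chunkOffsets.length; chunk++) {
            long offset = chunkOffsets[chunk];
            for (long j = 0; j < samplesPerChunk[chunk] && sample < sampleSizes.length; j++) {
                offsets[sample] = offset;
                offset += sampleSizes[sample];
                sample++;
            }
        }
        return offsets;
    }

    /** Start time in seconds of every sample; each run is {sampleCount, durationInTicks}. */
    static double[] sampleTimes(long[][] runs, long timescale, int sampleCount) {
        double[] times = new double[sampleCount];
        long ticks = 0;
        int sample = 0;
        for (long[] run : runs) {
            for (long j = 0; j < run[0] && sample < sampleCount; j++) {
                times[sample++] = (double) ticks / timescale;
                ticks += run[1];
            }
        }
        return times;
    }

    public static void main(String[] args) {
        long[] chunkOffsets = {1000, 5000};          // two chunks
        long[] samplesPerChunk = {3, 2};             // 3 samples, then 2
        long[] sampleSizes = {100, 40, 60, 120, 30}; // bytes
        long[][] runs = {{5, 3000}};                 // 5 samples of 3000 ticks each
        long timescale = 90000;                      // ticks per second
        long[] syncSamples = {1, 4};                 // 1-based keyframe numbers

        long[] offsets = sampleOffsets(chunkOffsets, samplesPerChunk, sampleSizes);
        double[] times = sampleTimes(runs, timescale, sampleSizes.length);
        System.out.println(Arrays.toString(offsets)); // prints [1000, 1100, 1140, 5000, 5120]
        for (long number : syncSamples) {
            int i = (int) number - 1; // sample numbers start at 1, arrays at 0
            System.out.println(number + " " + offsets[i] + " " + sampleSizes[i] + " " + times[i]);
        }
    }
}
```

The listing in this section does the same walk, but only stores the result when the current frame number is found in `syncSamples`.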


    if (sampleToChunkBox != null
            && sampleToChunkBox.getEntries().size() > 0
            && chunkOffsetBox != null
            && chunkOffsetBox.getChunkOffsets().length > 0
            && sampleSizeBox != null && sampleSizeBox.getSampleCount() > 0) {
        // Number of samples in each chunk, one entry per chunk.
        long[] numberOfSamplesInChunk = sampleToChunkBox.blowup(chunkOffsetBox.getChunkOffsets().length);
        if (sampleSizeBox.getSampleSize() > 0) {
            // Every sample has the same size, so there is no need to store each size separately.
            // This happens when people use raw audio formats in MP4.
            int sampleIndex = 0;
            long sampleSize = sampleSizeBox.getSampleSize();
            for (int i = 0; i < numberOfSamplesInChunk.length; i++) {
                long thisChunksNumberOfSamples = numberOfSamplesInChunk[i];
                long sampleOffset = chunkOffsetBox.getChunkOffsets()[i];
                for (int j = 0; j < thisChunksNumberOfSamples; j++) {
                    // Sample numbers start at 1 but we start at zero, therefore +1.
                    int syncSamplesIndex = Arrays.binarySearch(syncSamples, sampleIndex + 1);
                    if (syncSamplesIndex >= 0) {
                        syncSamplesOffset[syncSamplesIndex] = sampleOffset;
                        syncSamplesSize[syncSamplesIndex] = sampleSize;
                    }
                    sampleOffset += sampleSize;
                    sampleIndex++;
                }
            }
        } else {
            // The normal case, where all samples have different sizes.
            int sampleIndex = 0;
            long[] sampleSizes = sampleSizeBox.getSampleSizes();
            for (int i = 0; i < numberOfSamplesInChunk.length; i++) {
                long thisChunksNumberOfSamples = numberOfSamplesInChunk[i];
                long sampleOffset = chunkOffsetBox.getChunkOffsets()[i];
                for (int j = 0; j < thisChunksNumberOfSamples; j++) {
                    long sampleSize = sampleSizes[sampleIndex];
                    // Sample numbers start at 1 but we start at zero, therefore +1.
                    int syncSamplesIndex = Arrays.binarySearch(syncSamples, sampleIndex + 1);
                    if (syncSamplesIndex >= 0) {
                        syncSamplesOffset[syncSamplesIndex] = sampleOffset;
                        syncSamplesSize[syncSamplesIndex] = sampleSize;
                    }
                    sampleOffset += sampleSize;
                    sampleIndex++;
                }
            }
        }

        // Copy the track metadata; the timescale is needed below to convert ticks to seconds.
        MediaHeaderBox mdhd = trackBox.getMediaBox().getMediaHeaderBox();
        TrackHeaderBox tkhd = trackBox.getTrackHeaderBox();
        trackMetaData.setTrackId(tkhd.getTrackId());
        trackMetaData.setCreationTime(DateHelper.convert(mdhd.getCreationTime()));
        trackMetaData.setLanguage(mdhd.getLanguage());
        trackMetaData.setModificationTime(DateHelper.convert(mdhd.getModificationTime()));
        trackMetaData.setTimescale(mdhd.getTimescale());
        trackMetaData.setHeight(tkhd.getHeight());
        trackMetaData.setWidth(tkhd.getWidth());
        trackMetaData.setLayer(tkhd.getLayer());

        List<TimeToSampleBox.Entry> decodingTimeEntries = null;
        if (trackBox.getParent().getBoxes(MovieExtendsBox.class).size() > 0) {
            // Fragmented file: rebuild the (count, delta) entries from the track runs.
            decodingTimeEntries = new LinkedList<TimeToSampleBox.Entry>();
            for (MovieFragmentBox movieFragmentBox : trackBox.getIsoFile().getBoxes(MovieFragmentBox.class)) {
                List<TrackFragmentBox> trafs = movieFragmentBox.getBoxes(TrackFragmentBox.class);
                for (TrackFragmentBox traf : trafs) {
                    if (traf.getTrackFragmentHeaderBox().getTrackId() == trackBox.getTrackHeaderBox().getTrackId()) {
                        List<TrackRunBox> truns = traf.getBoxes(TrackRunBox.class);
                        for (TrackRunBox trun : truns) {
                            for (TrackRunBox.Entry entry : trun.getEntries()) {
                                if (trun.isSampleDurationPresent()) {
                                    if (decodingTimeEntries.size() == 0
                                            || decodingTimeEntries.get(decodingTimeEntries.size() - 1).getDelta() != entry.getSampleDuration()) {
                                        decodingTimeEntries.add(new TimeToSampleBox.Entry(1, entry.getSampleDuration()));
                                    } else {
                                        TimeToSampleBox.Entry e = decodingTimeEntries.get(decodingTimeEntries.size() - 1);
                                        e.setCount(e.getCount() + 1);
                                    }
                                }
                            }
                        }
                    }
                }
            }
        } else {
            decodingTimeEntries = stbl.getTimeToSampleBox().getEntries();
        }

        // Walk all samples in order and record the start time of each keyframe.
        long currentSample = 0;
        double currentTime = 0;
        for (TimeToSampleBox.Entry entry : decodingTimeEntries) {
            for (int j = 0; j < entry.getCount(); j++) {
                // Sample numbers start at 1 but we start at zero, therefore +1.
                int syncSamplesIndex = Arrays.binarySearch(syncSamples, currentSample + 1);
                if (syncSamplesIndex >= 0) {
                    timeOfSyncSamples[syncSamplesIndex] = currentTime;
                }
                currentTime += (double) entry.getDelta() / (double) trackMetaData.getTimescale();
                currentSample++;
            }
        }
    }

6. Run on Android


    public void printinfo() {
        // Label: "total video duration (seconds)"
        System.out.println("视频总时长(秒): " + lengthInSeconds);
        // Column header: keyframe number, frame offset, frame size, frame time
        System.out.println("关键帧 \t 帧偏移 \t 帧大小 \t 帧对应的时间");
        int size = syncSamples.length;
        for (int i = 0; i < size; i++) {
            System.out.println(syncSamples[i] + " " + syncSamplesOffset[i] + " " + syncSamplesSize[i] + " " + timeOfSyncSamples[i]);
        }
    }

The parse output is as follows:

    05-22 15:21:03.750: I/System.out(5291): remoteUrl: http://bbfile.b0.upaiyun.com/data/videos/2/vid_20120510_090204.mp4
    05-22 15:21:03.757: I/System.out(5291): localUrl: /mnt/sdcard/VideoCache/1337671263394.mp4
    05-22 15:21:16.343: I/System.out(5291): 视频总时长(秒): 123.636
    05-22 15:21:16.343: I/System.out(5291): 关键帧 帧偏移 帧大小 帧对应的时间
    05-22 15:21:16.343: I/System.out(5291): 1 405032 1449 0.0
    05-22 15:21:16.343: I/System.out(5291): 16 423325 3058 0.5002777777777777
    05-22 15:21:16.351: I/System.out(5291): 31 441936 2886 1.0
    05-22 15:21:16.351: I/System.out(5291): 46 467978 3575 1.4996777777777774
    05-22 15:21:16.351: I/System.out(5291): 61 498237 5578 1.9993999999999998
    05-22 15:21:16.351: I/System.out(5291): 76 562086 7654 2.4993444444444437
    ......
    05-22 15:21:16.585: I/System.out(5291): 3676 15952210 13153 122.43723333333335
    05-22 15:21:16.585: I/System.out(5291): 3691 16050289 11484 122.93692222222225
    05-22 15:21:16.585: I/System.out(5291): 3706 16131270 12718 123.4366666666667

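From the data above (the columns are keyframe number, byte offset, size in bytes, and time in seconds) we can see:

a. The total duration of the video is 123.636 s.

b. One second of video holds about 30 frames, 2 of which are keyframes. Taking the first second as an example, frames 1 and 16 are keyframes. Their time points are 0 s and about 0.5 s, their data sits at offsets 405032 and 423325 inside the video file, and their sizes are 1449 and 3058 bytes.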
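With these tables, a seek can be turned into a byte offset. The sketch below is one possible way to do it, not the implementation used in the project: it picks the last keyframe at or before the requested time with a binary search and formats the offset as the value of an HTTP `Range` request header. The class `SeekSketch` and its methods are hypothetical names; the times and offsets are the first four rows of the log above.

```java
import java.util.Arrays;

// Sketch only: hypothetical helper, using the first four keyframes from the log.
final class SeekSketch {

    /** Index of the last keyframe whose time is at or before `seconds` (0 if none is earlier). */
    static int keyframeIndexFor(double[] keyframeTimes, double seconds) {
        if (keyframeTimes.length == 0) {
            throw new IllegalArgumentException("no keyframes");
        }
        int pos = Arrays.binarySearch(keyframeTimes, seconds);
        if (pos >= 0) {
            return pos; // exact hit
        }
        int insertionPoint = -pos - 1; // index of the first keyframe later than `seconds`
        return Math.max(0, insertionPoint - 1);
    }

    /** Value for an HTTP Range header that requests everything from `offset` to the end. */
    static String rangeHeaderFor(long offset) {
        return "bytes=" + offset + "-";
    }

    public static void main(String[] args) {
        double[] times = {0.0, 0.5002777777777777, 1.0, 1.4996777777777774};
        long[] offsets = {405032, 423325, 441936, 467978};

        int i = keyframeIndexFor(times, 0.7); // user seeks to 0.7 s
        System.out.println("keyframe time: " + times[i]);             // prints keyframe time: 0.5002777777777777
        System.out.println("Range: " + rangeHeaderFor(offsets[i]));   // prints Range: bytes=423325-
    }
}
```

Snapping to the earlier keyframe means playback may start slightly before the requested time, which follows from the fact that a seek can only land on a keyframe.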

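Source file download: http://carey-blog-image.googlecode.com/files/CareyMp4Parser%2820120522%29.java

That is all for the parsing part. If you have any questions, leave me a comment. I will follow up later with the code that implements seeking.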

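I really don't understand the offset calculation code in section 5. Could you point me to any reference material?

Thanks.

Has the seeking code been posted yet?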
